Published on October 14th, 2025

Today I learnt an important lesson:

Context

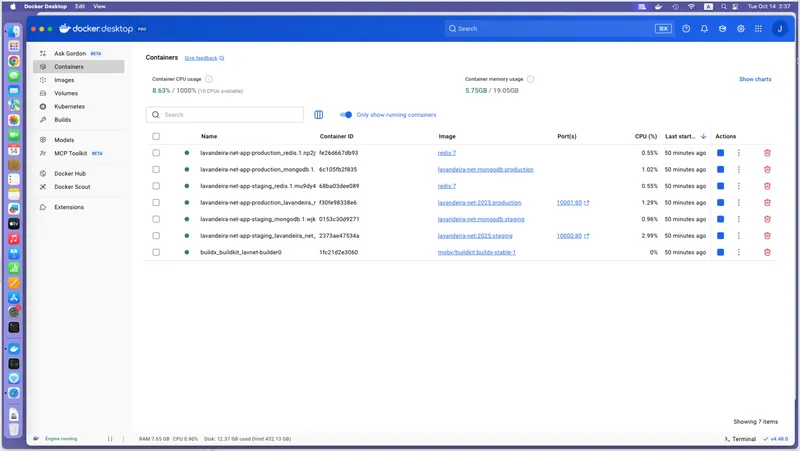

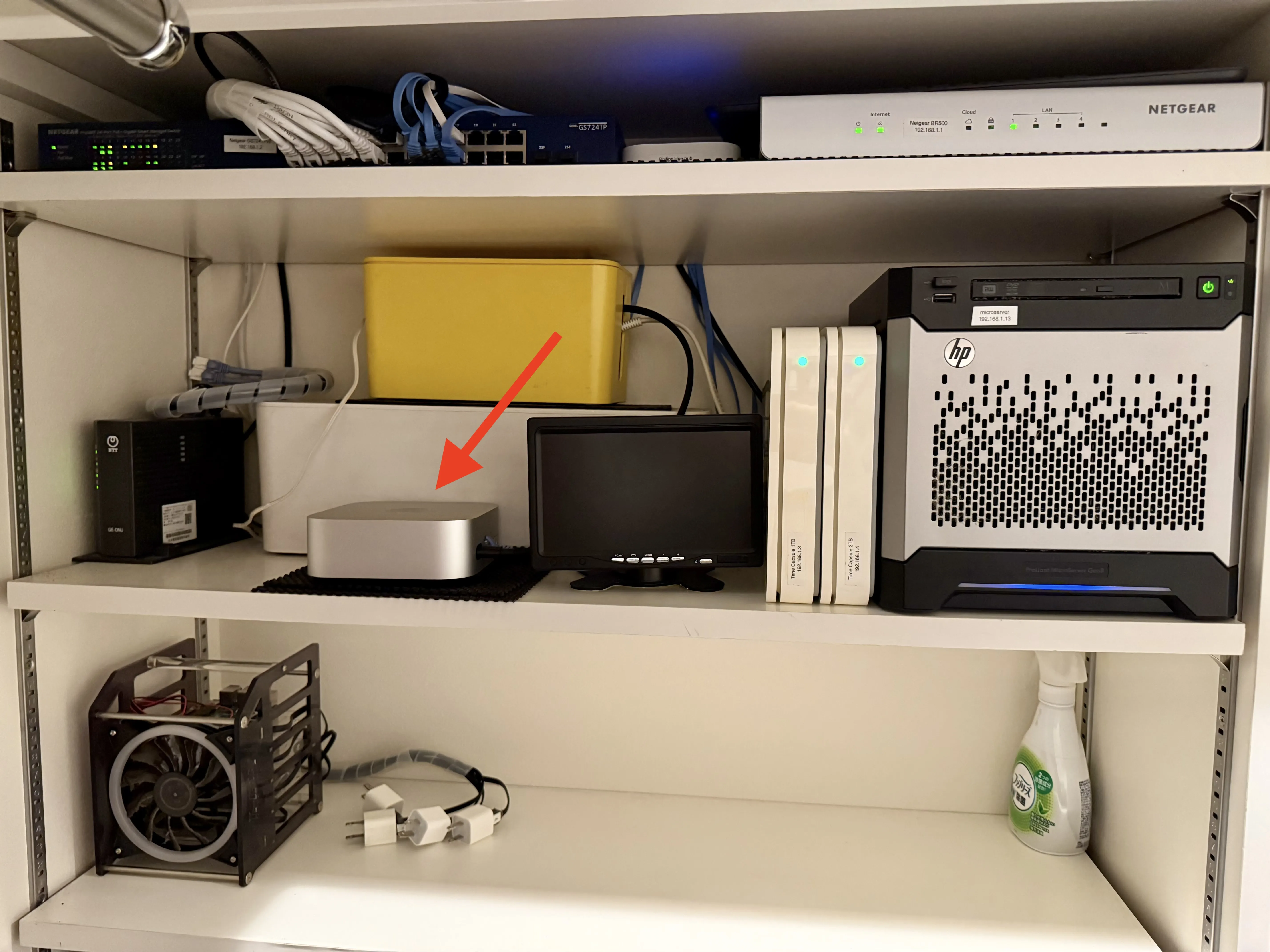

As some of you may know, I have a small home lab with a few servers and network devices. This is where I work on the web applications that I enjoy building in my free time, and I host them here until they become more mature. Then I move them to AWS.

My website (the one you're looking at right now) is hosted here, among a number of other things. To be specific, it's running on this new Mac mini in its own Docker Swarm.

The application containers are stateless, so they can be launched and destroyed as needed based on service load without losing any data. However, the database containers (or, to be specific, the database containers' volumes) aren't.

I don't like losing databases, so I set up Time Machine on the Mac mini to back up the whole server over the network to one of the two old Time Capsules. Not that I often need to rely on backups, but I like the peace of mind they give me.

I thought that having consistent backups of the server would be enough.

I was wrong.

So what happened?

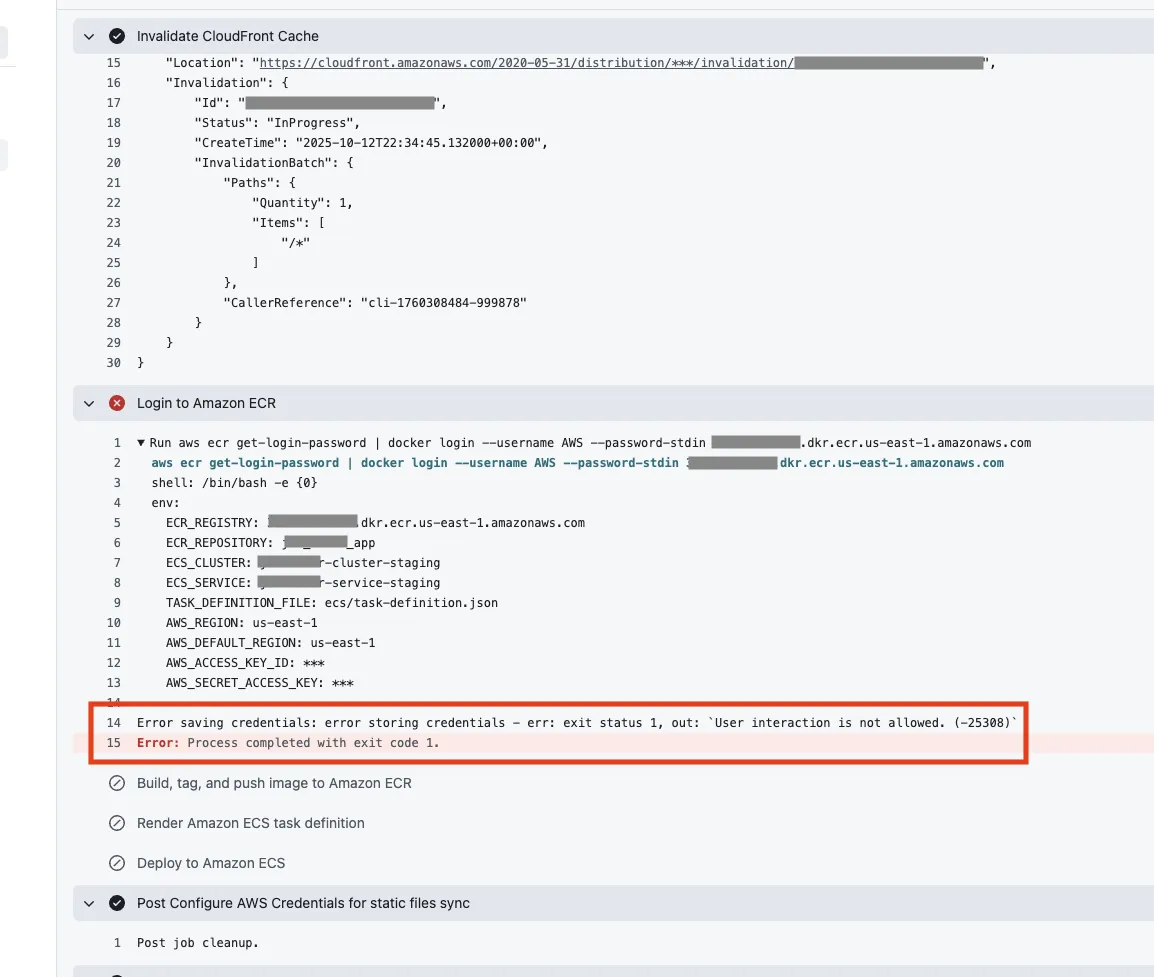

Yesterday I was tinkering with the Docker Desktop configuration in order to fix a Mac-specific issue when deploying another of my applications to AWS ECS using a GitHub Actions workflow:

Based on what I read online, this happens because Docker Desktop for macOS tries to store these credentials using the macOS Keychain, and this doesn't work in a non-interactive workflow because it requires the user to enter the keychain password interactively.

I found some document online describing how to update Docker Desktop's configuration to prevent it from using the keychain, so I changed the configuration, restarted the Docker engine, and... it failed to restart.

Long story short: the solution recommended online was to completely uninstall Docker Desktop and all its data (container images, configuration files, volumes), then reinstall and rebuild. I thought that was too much work, because after all I had Time Machine backups from earlier in the day, right? I could just restore a backup from a couple of hours ago and it would be quicker, right?

So that's what I did: I restored the server from a snapshot taken earlier that night. The server booted, Docker started... and there were no containers, images or volumes. 😱

This is where I panicked, thinking that I had lost all the content I had entered in this site's database: blog posts, projects, photo galleries, articles...

Luckily, I happened to have a dump of the database that I had taken a few days earlier, and I was able to rebuild the database from it. I only lost a couple of short blog posts that I wrote in the last few days, and a couple of project entries that I can easily rewrite.

What's next?

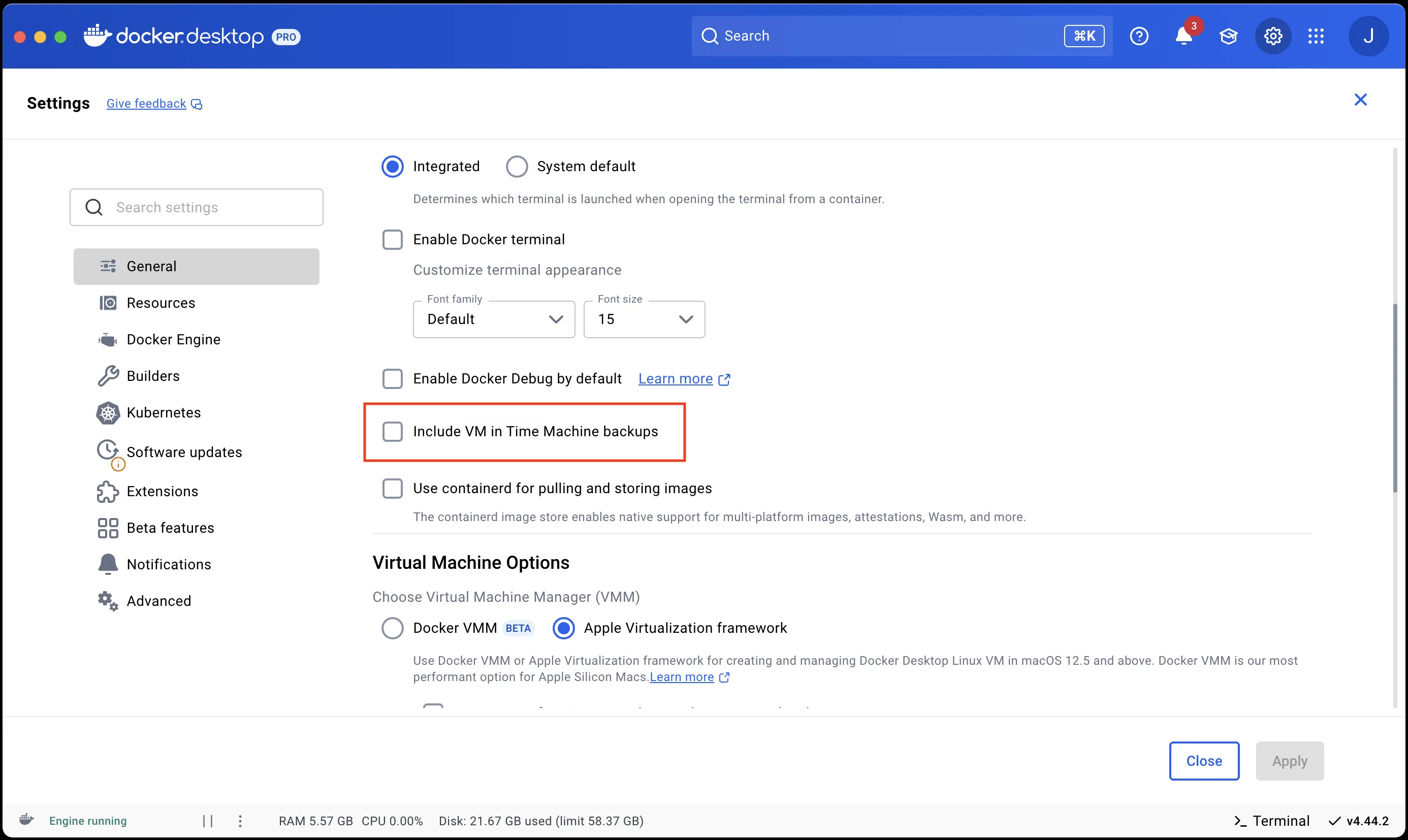

It turns out that Docker Desktop has a checkbox to "Include the VM in Time Machine backups". I've enabled it, but I don't trust it.

For now I will just write a quick script to dump the database daily to an S3 bucket or an NFS volume. When I'm not busy, I'll move the application to AWS ECS with the other stuff I have in there, which will also save me the pain of dealing with the self-hosted GitHub Actions runner on the macOS host.
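
Here is a minimal sketch of what such a daily dump script could look like in Python. It is not the script actually running on this server. It assumes the dump command writes SQL to standard output and that the destination is a directory, for example a mounted NFS volume. The function names (`dump_name`, `run_dump`, `prune`), the file naming scheme and the retention logic are all made up for illustration.

```python
import datetime as dt
import gzip
import subprocess
from pathlib import Path


def dump_name(prefix: str, when: dt.datetime) -> str:
    """Build a sortable, timestamped file name for one dump."""
    return f"{prefix}-{when:%Y%m%d-%H%M%S}.sql.gz"


def run_dump(command: list[str], dest_dir: Path, prefix: str, when: dt.datetime) -> Path:
    """Run `command`, gzip whatever it prints to stdout, and store it in `dest_dir`."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / dump_name(prefix, when)
    tmp = target.with_suffix(".tmp")
    # check=True raises CalledProcessError if the dump command exits non-zero.
    # The output is buffered in memory, which is fine for a small site database.
    result = subprocess.run(command, check=True, capture_output=True)
    with gzip.open(tmp, "wb") as fh:
        fh.write(result.stdout)
    # Rename only after a complete write, so a half-written dump never looks valid.
    tmp.replace(target)
    return target


def prune(dest_dir: Path, prefix: str, keep: int) -> list[Path]:
    """Delete all but the `keep` newest dumps and return the deleted paths."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    # The timestamp format sorts chronologically, so a plain sort is enough.
    dumps = sorted(dest_dir.glob(f"{prefix}-*.sql.gz"))
    stale = dumps[:-keep]
    for path in stale:
        path.unlink()
    return stale


# Hypothetical daily invocation. PostgreSQL, the container name "site-db",
# the user "app", the database "appdb" and the mount point are assumptions:
#
#   cmd = ["docker", "exec", "site-db", "pg_dump", "-U", "app", "appdb"]
#   run_dump(cmd, Path("/Volumes/backups/site"), "site", dt.datetime.now())
#   prune(Path("/Volumes/backups/site"), "site", keep=14)
```

Something like this could be scheduled once a day with cron or launchd. Uploading to an S3 bucket instead of writing to a directory is not covered here and would need an S3 client on top.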

Anyway, the takeaway from this is: